Overview

To support high availability and horizontal scaling, edgeCore can be deployed in a cluster configuration. The cluster consists of a single Admin Server and several (strictly speaking, zero or more) Content Servers.

The cluster is coordinated through the edgeCore authentication database. In a standalone installation, the edgeCore process hosts the authentication database itself, alongside the configuration database. In a cluster deployment, an external authentication database must be configured instead. For a fresh installation this is relatively straightforward, but it does involve editing the configuration file [Install_Home]/conf/custom.properties. Uncomment and update each line that sets a property with the prefix db.auth, so that the relevant part of the file looks like this:

```properties
# settings in custom.properties will be loaded after system/command-line properties and before defaults
db.auth.url=jdbc:mariadb://localhost:3306/edgeAuth
db.auth.devurl=jdbc:mariadb://localhost:3306/edgeAuth
db.auth.username=auth
#######################################################################################################
# The following db.auth.password will be encrypted on next startup when you set a plain text password
#######################################################################################################
db.auth.password=auth
db.auth.driver=org.mariadb.jdbc.Driver
db.auth.dialect=org.hibernate.dialect.MySQL8Dialect
#change the cache DB connection pool max from default of 20
#db.cache.poolmax=50
# uncomment this to run in secure mode
#ssl.permissive=false
# uncomment and update this if you set the trust store password
#ssl.trustStorePass=changeit
```

When the edgeCore server is next started, it will connect to the specified database and initialize it with the edgeCore authentication database schema.

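This same configuration must be used for all nodes in the cluster. The host in db.auth.url (localhost in the sample above) must therefore be one that every node can reach.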
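Every node must share the same authentication database settings, so it can help to check the files before starting the nodes. The following is a minimal shell sketch and is not part of edgeCore. The function names missing_auth_keys, auth_settings and same_auth_config are hypothetical. The first function reports any of the six db.auth properties shown above that are not set (for example, still commented out). The second and third compare the active db.auth lines of two nodes' custom.properties files. Both skip db.auth.password, on the assumption that the encrypted value edgeCore writes on startup may differ between nodes.

```bash
# Hypothetical helpers (not part of edgeCore) for checking db.auth settings.

# Print each required db.auth property that is not set (missing or commented out).
missing_auth_keys() {
  local key
  for key in url devurl username password driver dialect; do
    grep -Eq "^[[:space:]]*db\.auth\.${key}[[:space:]]*=" "$1" || echo "db.auth.${key}"
  done
}

# Print the active db.auth.* lines, normalized and sorted, skipping the
# password because its stored (encrypted) form may differ between nodes.
auth_settings() {
  grep -E '^[[:space:]]*db\.auth\.' "$1" \
    | sed -E 's/^[[:space:]]+//; s/[[:space:]]*=[[:space:]]*/=/' \
    | grep -v '^db\.auth\.password=' \
    | sort
}

# Return 0 if two files have the same db.auth settings; otherwise print both and return 1.
same_auth_config() {
  local a b
  a=$(auth_settings "$1")
  b=$(auth_settings "$2")
  [ "$a" = "$b" ] && return 0
  printf -- '--- %s\n%s\n--- %s\n%s\n' "$1" "$a" "$2" "$b"
  return 1
}
```

For example, `missing_auth_keys conf/custom.properties` prints nothing when the file is complete. The sketch only understands simple key=value lines, not the key:value or backslash-continuation forms that Java properties files also allow.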

Basic Installation

Follow these steps to install an Admin Server first, then any number of Content Servers:

- Deploy the Admin Server:

a. Follow the steps for a standalone installation.

b. Update [Install_Home]/conf/custom.properties to point to the shared authentication database.

c. Start the Admin Server.

d. Wait until it is up and running, then log in as the admin user.

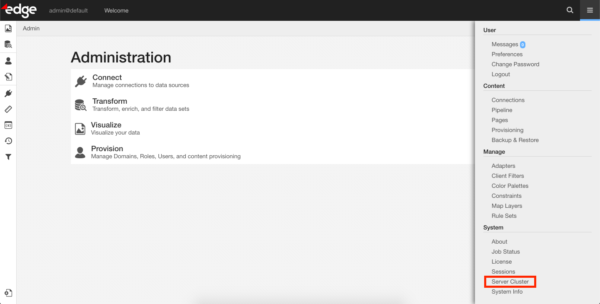

e. Navigate to System → Server Cluster:

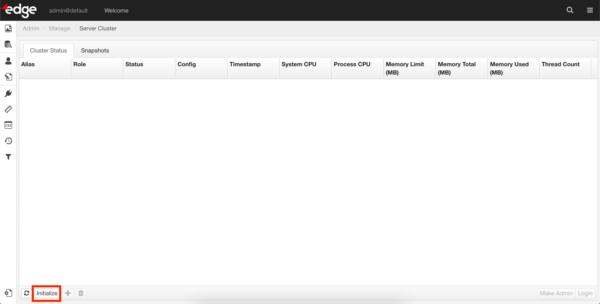

f. Initialize the cluster by clicking the ‘Initialize’ button.

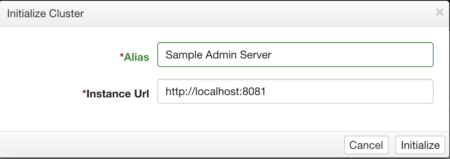

Then specify a name for the Admin Server and the URL used to access it.

- Deploy the Content Servers:

a. Follow the steps for a standalone installation.

b. Update [Install_Home]/conf/custom.properties to point to the shared authentication database (you can copy the settings from the Admin Server).

c. Start the Content Server.

d. Wait until it is up and running, then log in as the admin user.

e. Navigate to System → Server Cluster.

f. Add the Content Server to the cluster by clicking the ‘+’ button. Specify a name for the node, and the URL used to access it.

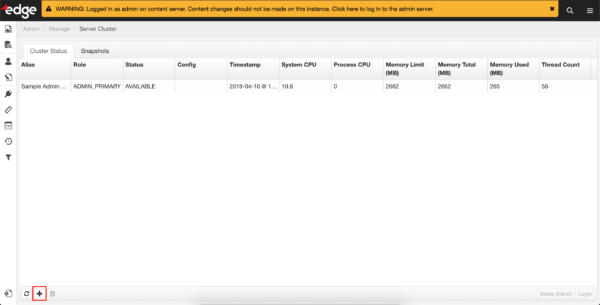

g. Wait a few moments – an initial configuration snapshot will automatically be pulled and deployed.

Cluster Upgrade

The simplest way to upgrade the edgeCore software in a cluster is to keep using the same authentication database after the upgrade. The recommended steps are:

- Make a full backup of the Admin Server.

- Copy the backup archive to a safe location.

- Stop all nodes in the cluster.

- Clear or reinitialize the authentication database.

a. For MySQL or MariaDB, this can be as simple as DROP DATABASE followed by CREATE DATABASE (user privileges will be unaffected).

- For each node, follow the normal steps for upgrading a standalone server, except:

a. Do not start any of the servers until the necessary configuration is in place.

b. For Content Servers, there is no need to back up and restore, because the latest cluster configuration will be pulled from the Admin Server. It is critical, though, that the local configuration is preserved: environment.sh/bat and custom.properties need to be copied from the previous installation.

- Start the Admin Server. Confirm that it is up and running.

- The cluster needs to be reinitialized – this is the same process as a fresh installation:

a. Navigate to System → Server Cluster.

b. Initialize the cluster by clicking the ‘Initialize’ button. Specify a name for the Admin Server and the URL used to access it.

- On the Admin Server, it is now safe to restore the backup made with the previous version, if you have not already done so.

- Start the Content Servers and add them to the cluster as per a fresh installation:

a. Navigate to System → Server Cluster.

b. Add the Content Server to the cluster by clicking the ‘+’ button. Specify a name for the node, and the URL used to access it.

c. Wait a few moments – an initial configuration snapshot will automatically be pulled and deployed.
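Two of the upgrade steps can be scripted at the shell level: clearing the authentication database, and carrying the local configuration over to a new installation. This is a hedged sketch with hypothetical function names, not an edgeCore tool.

The database name edgeAuth is only the one used in the sample db.auth.url. CREATE DATABASE uses the server's default character set, so if you originally created the database with a specific character set or collation, add the same clause.

The copy function assumes nothing about the directory layout beyond keeping each file at the same path relative to the installation home.

```bash
# Hypothetical helpers for the cluster upgrade steps; not part of edgeCore.

# Print SQL that clears the authentication database by dropping and recreating it.
# edgeAuth is only the name from the sample db.auth.url; pass your own.
reset_auth_db_sql() {
  local db=${1:-edgeAuth}
  printf 'DROP DATABASE IF EXISTS `%s`;\nCREATE DATABASE `%s`;\n' "$db" "$db"
}

# Copy environment.sh/.bat and custom.properties from the previous installation
# to the same relative paths under the new one, printing each copied path.
carry_over_local_config() {
  local old=${1%/} new=${2%/} name
  for name in environment.sh environment.bat custom.properties; do
    find "$old" -type f -name "$name" | while IFS= read -r f; do
      rel=${f#"$old"/}
      mkdir -p "$new/$(dirname "$rel")"
      cp -p "$f" "$new/$rel"
      echo "copied $rel"
    done
  done
}
```

With all nodes stopped, `reset_auth_db_sql edgeAuth | mysql -u root -p` feeds the statements to the MySQL/MariaDB client. `carry_over_local_config OLD_HOME NEW_HOME` prints every file it copies, so check the output for unexpected matches, such as copies kept in backup folders.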

Upgrade with new authentication database

If you want to leave the existing authentication database intact, you can point the upgraded cluster at a new, separate database, but this requires modifying the backup of the previous version's configuration. Follow the Cluster Upgrade instructions above, except:

- Configure [Install_Home]/conf/custom.properties for each node to point to the new authentication database.

- Unpack the backup archive from the previous version, copy the new custom.properties over the one in the archive, then repack it. This ensures that restoring the backup to the new Admin Server does not overwrite custom.properties with the old configuration.

Migrating from a Standalone Server to a Cluster

This can be achieved by following the steps for Cluster Upgrade, except that the from and to versions are the same. The existing server is nominated as the Admin Server, and one or more Content Servers are added.

Using the CLI to manage a cluster

By using the edgeCore Command Line Interface (CLI), cluster deployment can be automated, which is especially useful in container environments. The following example manages a Docker-based cluster with an arbitrary number of content servers. The Admin Server is referred to as the primary server.

The image has edgeCore installed at /eS, and the primary server container is deployed with a persistent volume mounted on /eS/exports. On shutdown, the primary server automatically makes a backup to /eS/exports/latest.zip and saves local.properties (which contains the primary server instance id) to /eS/exports. Subsequent instantiations of the primary server use these two resources to retain their identity and content.

The content servers wait, if necessary, for cluster initialization and then join the cluster automatically; they leave the cluster on shutdown. Other approaches are possible and may fit specific needs better, and the techniques shown here can be adapted accordingly.
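Starting the containers might look like the following sketch. The image name, container names, network, volume name, database host and the in-image script paths /root/run-primary and /root/run-content are all hypothetical. Only the /eS/exports mount point and the AUTH_* variable names come from this section. The function prints the docker command by default; set DRY_RUN=0 to actually run it.

The stop timeout is raised because the primary server's SIGTERM handler makes a backup and then sleeps for 10 seconds before stopping edgeCore. Together these can exceed Docker's default 10-second grace period, after which the container is killed. The value of 120 seconds is an arbitrary allowance.

```bash
# Sketch only: every name below except /eS/exports and the AUTH_* variables
# is a hypothetical placeholder. Usage: run_node primary|content CONTAINER_NAME
run_node() {
  local role=$1 name=$2 image=${IMAGE:-example/edgecore}
  # allow time for the SIGTERM cleanup (backup, leave cluster, stop) to finish
  set -- run -d --name "$name" --network es-net --stop-timeout 120 \
    -e AUTH_URL=jdbc:mariadb://authdb:3306/edgeAuth \
    -e AUTH_USER=auth -e AUTH_PASS=auth \
    -e AUTH_DRIVER=org.mariadb.jdbc.Driver \
    -e AUTH_DIALECT=org.hibernate.dialect.MySQL8Dialect
  if [ "$role" = primary ]; then
    # persistent volume holding latest.zip and local.properties
    set -- "$@" -v es-exports:/eS/exports "$image" /root/run-primary
  else
    set -- "$@" "$image" /root/run-content
  fi
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo docker "$@"
  else
    docker "$@"
  fi
}
```

The sketch assumes a user-defined network (for example, created with `docker network create es-net`) on which the authentication database and all nodes can reach each other. Passing the password with -e makes it visible in `docker inspect`; an env file or secret store may be preferable.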

Initializing a Cluster

Cluster initialization is a one-time action. In this example, it is left as a manual process. Once the primary server container has started, connect to it directly (i.e., not through the load balancer) and log in as the admin user. Then install a license and initialize the cluster as described in the Basic Installation section. Any content server containers wait to join the cluster until it has been initialized.

Provisioning the AuthDb

Both the primary and content server run scripts source this helper script (/root/properties-helper in the image) to generate conf/custom.properties settings from environment variables:

```bash
# Sourced by the run scripts from the edgeCore home (/eS); paths are relative to it.
# The AUTH_* values must be exported environment variables (for example set with
# docker run -e), because perl reads them from %ENV.

# override the standard custom.properties by putting custom.properties in /root on the image
if [ -f /root/custom.properties ]; then
  cp -p /root/custom.properties conf
fi

# uncomment edge.cli.local.user and set it to admin
args=(-e 's/^# (edge.cli.local.user).*/$1=admin/;')

# uncomment and set each db.auth property whose environment variable is non-empty
if [ -n "$AUTH_URL" ]; then
  args+=(-e 's/^#(db.auth.url).*/$1=$ENV{AUTH_URL}/;')
  args+=(-e 's/^#(db.auth.devurl).*/$1=$ENV{AUTH_URL}/;')
fi
if [ -n "$AUTH_USER" ]; then
  args+=(-e 's/^#(db.auth.username).*/$1=$ENV{AUTH_USER}/;')
fi
if [ -n "$AUTH_PASS" ]; then
  args+=(-e 's/^#(db.auth.password).*/$1=$ENV{AUTH_PASS}/;')
fi
if [ -n "$AUTH_DRIVER" ]; then
  args+=(-e 's/^#(db.auth.driver).*/$1=$ENV{AUTH_DRIVER}/;')
fi
if [ -n "$AUTH_DIALECT" ]; then
  args+=(-e 's/^#(db.auth.dialect).*/$1=$ENV{AUTH_DIALECT}/;')
fi

# perl -p applies all the -e expressions to every line; write a temp file, then replace
perl -p "${args[@]}" conf/custom.properties > conf/c.p
mv conf/c.p conf/custom.properties
```

Run Script for the Primary Server

```bash
#!/bin/bash
# script to start a primary server
# edgeCore is installed at /eS; exports/ is the persistent volume
cd /eS || exit 1
source /root/properties-helper

cleanup() {
  # save local.properties to keep the primary server instance.id
  if [ -f conf/local.properties ]; then
    cp -p conf/local.properties exports
  fi
  bin/es-cli.sh backup -c -f latest
  # give content servers time to leave the cluster
  # (optional if orchestration shuts down the content servers first)
  sleep 10
  bin/edge.sh stop
  exit
}

trap 'cleanup' SIGTERM

# restore the previously saved local.properties to get our instance.id
if [ -f exports/local.properties ]; then
  cp -p exports/local.properties conf
fi

bin/edge.sh start

for ((i=0; i<10; i++)); do
  # use sysinfo to detect that edgeCore is ready for commands
  if bin/es-cli.sh sysinfo; then
    if [ -f exports/latest.zip ]; then
      bin/es-cli.sh restore -l true -t CONTENT_ONLY -f latest.zip
    fi
    break
  fi
  sleep 5 # wait before retrying
done

# follow all the logs so that the docker logs command works nicely
tail -fn 10000 logs/*.log &

# wait for a SIGTERM; short sleeps let the trap run promptly
while :; do sleep 1; done
```

Run Script for Content Servers

```bash
#!/bin/bash
# script to start a content server
cd /eS || exit 1
source /root/properties-helper

sleep 10 # temporary workaround so that the admin server can create the auth schema

# use the first IP address of this container as the node alias and URL host
IP=$(hostname -I | sed 's/ .*//')
echo "Content server $IP starting..."

cleanup() {
  # leave the cluster before stopping
  bin/es-cli.sh cluster -l -a "$IP"
  bin/edge.sh stop
  exit
}

trap cleanup SIGTERM

bin/edge.sh start

for ((i=0; i<10; i++)); do
  # use sysinfo to detect that edgeCore is ready for commands
  if bin/es-cli.sh sysinfo; then
    # install the license, trying up to 3 times
    for ((j=0; j<3; j++)); do
      if bin/es-cli.sh license -f /root/license.properties; then
        break
      fi
      sleep 5
    done

    # wait until the cluster has been initialized
    while :; do
      ok=false
      for ((j=0; j<3; j++)); do
        if cstat=$(bin/es-cli.sh cluster -s); then
          ok=true
          break
        fi
        echo "Could not obtain cluster status"
        sleep 5
      done
      if ! $ok; then # something fatally wrong
        exit 1
      fi
      # empty status output means the cluster is not initialized yet
      if ! echo $cstat | grep -vq '^$'; then
        echo "Cluster not initialized ... waiting"
        for ((j=0; j<120; j++)); do
          sleep 1 # so that SIGTERM interrupts are handled promptly
        done
      else
        break
      fi
    done

    # join cluster using our IP address as alias and URL
    bin/es-cli.sh cluster -j -a "$IP" -u "http://$IP"
    break
  fi
  sleep 5 # wait before retrying
done

# follow all the logs so that the docker logs command works nicely
tail -fn 10000 logs/*.log &

# wait for a SIGTERM
while :; do sleep 1; done
```
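The run scripts repeat one pattern several times: run a CLI command and try again if it fails (sysinfo, license, cluster status). If you adapt the scripts, a small helper can keep that logic in one place. This is a sketch; retry is a hypothetical function name, not part of the edgeCore CLI.

```bash
# Hypothetical helper: retry ATTEMPTS DELAY COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY seconds after each
# failure. Returns 0 on the first success, otherwise the last exit status.
retry() {
  local attempts=$1 delay=$2 n=1 status
  shift 2
  while :; do
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      return "$status"
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}
```

For example, `retry 10 5 bin/es-cli.sh sysinfo || { echo "edgeCore did not become ready"; exit 1; }` replaces the readiness loop and fails explicitly instead of carrying on silently.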