If you have a JSON file that stores data as key-value pairs, you can parse it with custom JavaScript to get the value of any data attribute.

Our JSON file, which you can download here, looks like this:

```json
{
  "options": {
    "idx.max": {
      "type": "int",
      "description": "limits the maxium number of collection indexes to return for the requested presentation data"
    },
    "idx.list": {
      "type": "list",
      "description": "a comma-delimited list of collection indexes for which to fetch data (when not specified, all indexes up to idx.max will be fetched)"
    },
    "insert_nulls": {
      "type": "boolean",
      "description": "insert NULLs for all poll dates within the specified date range that do not have polled values"
    },
    "duration": {
      "type": "string",
      "description": "human readable short-hand, such as 24h, 5d, 90m where h=HOUR, d=DAY, m=MINUTE. Used to specify the amount of data to fetch"
    },
    "beginstamp": {
      "type": "timestamp",
      "description": "timestamp for the beginning of the desired range of data"
    },
    "endstamp": {
      "type": "timestamp",
      "description": "timestamp for the end of the desired range of data"
    },
    "fetch_indexes": {
      "type": "boolean",
      "description": "fetch the list of collection indexes (and their string labels, if any exist) for the date range instead of actual data. Note: if fetch_indexes is enabled, idx.max and idx.list will be ignored."
    },
    "hide_options": {
      "type": "boolean",
      "description": "hide these options"
    },
    "aggregate_fields": {
      "type": "string",
      "description": "Comma delimited list containing one or more of: std,min,max,avg,sum. "
    }
  },
  "data": {
    "0": {
      "min": {
        "1652745600": "1.44",
        "1652832000": "1.45",
        "1652918400": "1.25",
        "1653004800": "1.25",
        "1653091200": "1.28",
        "1653177600": "1.3",
        "1653264000": "1.33",
        "1653350400": "1.21",
        "1653436800": "1.27",
        "1653523200": "1.31",
        "1653609600": "1.41",
        "1653696000": "1.32",
        "1653782400": "1.34",
        "1653868800": "1.29",
        "1653955200": "1.47",
        "1654041600": "1.53",
        "1654128000": "1.43",
        "1654214400": "1.48",
        "1654300800": "1.37",
        "1654387200": "1.41",
        "1654473600": "1.36",
        "1654560000": "1.37",
        "1654646400": "1.51",
        "1654732800": "1.47",
        "1654819200": "1.42",
        "1654905600": "1.57",
        "1654992000": "1.58",
        "1655078400": "1.63",
        "1655164800": "1.66",
        "1655251200": "1.61"
      },
      "max": {
        "1652745600": "41.11",
        "1652832000": "41.9",
        "1652918400": "40.85",
        "1653004800": "43.53",
        "1653091200": "41.99",
        "1653177600": "42.39",
        "1653264000": "42.66",
        "1653350400": "41.24",
        "1653436800": "42.76",
        "1653523200": "41.17",
        "1653609600": "43.29",
        "1653696000": "40.39",
        "1653782400": "41.12",
        "1653868800": "41.3",
        "1653955200": "41.31",
        "1654041600": "41.07",
        "1654128000": "42.76",
        "1654214400": "42.93",
        "1654300800": "41.57",
        "1654387200": "42.71",
        "1654473600": "41.78",
        "1654560000": "42.34",
        "1654646400": "41.22",
        "1654732800": "40.03",
        "1654819200": "40.18",
        "1654905600": "44.9",
        "1654992000": "40.99",
        "1655078400": "41.78",
        "1655164800": "40.85",
        "1655251200": "41.5"
      },
      "avg": {
        "1652745600": "2.134201388888891",
        "1652832000": "2.118923611111111",
        "1652918400": "2.2128819444444456",
        "1653004800": "1.9538541666666658",
        "1653091200": "1.9008680555555562",
        "1653177600": "1.9047569444444428",
        "1653264000": "1.9931851851851856",
        "1653350400": "2.017465277777779",
        "1653436800": "1.9823958333333307",
        "1653523200": "2.0069791666666683",
        "1653609600": "2.1018402777777783",
        "1653696000": "2.073333333333333",
        "1653782400": "2.082881944444443",
        "1653868800": "2.0815277777777745",
        "1653955200": "2.14034722222222",
        "1654041600": "2.1777777777777754",
        "1654128000": "2.147881944444446",
        "1654214400": "2.1532638888888904",
        "1654300800": "2.123506944444444",
        "1654387200": "2.1457291666666656",
        "1654473600": "2.1339583333333323",
        "1654560000": "2.166111111111112",
        "1654646400": "2.2408333333333332",
        "1654732800": "2.2234722222222225",
        "1654819200": "2.2369444444444473",
        "1654905600": "2.246250000000001",
        "1654992000": "2.262361111111112",
        "1655078400": "2.307847222222223",
        "1655164800": "2.3414236111111113",
        "1655251200": "2.470366492146595"
      }
    }
  }
}
```
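In this file, each value sits four levels deep: `data`, then the collection index (`"0"`), then the aggregate name (`min`, `max`, or `avg`), and finally a Unix timestamp in seconds. All values are stored as strings. The snippet below is a minimal sketch, meant to run standalone (for example in Node) rather than in the Script tab. It reads one value from a trimmed copy of the sample data and converts its key to a date. The helper name `readValue` is hypothetical and not part of the platform.

```javascript
// Trimmed copy of the sample file: one timestamp per aggregate.
var inputString = JSON.stringify({
  data: {
    "0": {
      min: { "1652745600": "1.44" },
      max: { "1652745600": "41.11" },
      avg: { "1652745600": "2.134201388888891" }
    }
  }
});

// Hypothetical helper: return one aggregate value for a collection index
// and timestamp, or undefined if it is missing. Values are stored as
// strings, so they are converted with Number().
function readValue(json, index, aggregate, epochSeconds) {
  var series = json.data[index] && json.data[index][aggregate];
  if (!series || !(epochSeconds in series)) {
    return undefined;
  }
  return Number(series[epochSeconds]);
}

var parsed = JSON.parse(inputString);
var maxValue = readValue(parsed, "0", "max", "1652745600");

// The keys are Unix timestamps in seconds; Date expects milliseconds.
var recordedAt = new Date(Number("1652745600") * 1000);

console.log(maxValue);                 // 41.11
console.log(recordedAt.toISOString()); // 2022-05-17T00:00:00.000Z
```

The seconds-based keys are also why the `recordedAt` attribute in the script declares `units : 'seconds'`.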

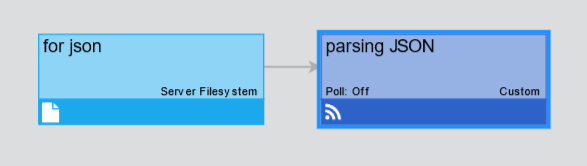

We created a Server Filesystem connection and then a Custom feed based on it.

In the Custom feed, we uploaded the JSON file shown above, as you can see in this video.

This is the script we used in the Script tab (you can download it here to copy it easily):

```javascript
function getAttributes(inputString, nodeVars, secVars) {
  // Declare one attribute per column the feed returns.
  // Names: alphanumerics and underscores only; no leading, trailing, or adjacent underscores.
  // Types: 'string'|'int'|'long'|'number'|'boolean'|'date'
  var attributes = [];

  attributes.push({
    name: 'recordedAt',
    type: 'date',
    isId: false,      // true|false
    units: 'seconds'  // 'millis'|'seconds' (timestamps only); the JSON keys are Unix seconds
  });

  attributes.push({ name: 'avg', type: 'number' });
  attributes.push({ name: 'max', type: 'number' });
  attributes.push({ name: 'min', type: 'number' });

  return jsAttributesSuccess(attributes, "Example Success");
  // Alternatively, return jsAttributesFailure(failureMsg) to report a failure.
}

function getObjectKeys(obj) {
  return Object.keys(obj);
}

function getRecords(inputString, nodeVars, secVars) {
  var rawJsonData = JSON.parse(inputString);

  // Entry point: the series for collection index "0".
  var parsedRawData = rawJsonData.data['0'];

  var records = [];
  var lookup = {}; // timestamp -> record, so the other series can be merged in

  var avgData = parsedRawData.avg;
  var maxData = parsedRawData.max;
  var minData = parsedRawData.min;

  // Create one record per timestamp from the avg series.
  getObjectKeys(avgData).forEach(function (key) {
    var record = { recordedAt: key, avg: avgData[key] };
    lookup[key] = record;
    records.push(record);
  });

  // Merge max values into the matching records (timestamps missing from avg are skipped).
  getObjectKeys(maxData).forEach(function (key) {
    if (lookup[key]) {
      lookup[key].max = maxData[key];
    }
  });

  // Merge min values; iterate the min keys, not the max keys.
  getObjectKeys(minData).forEach(function (key) {
    if (lookup[key]) {
      lookup[key].min = minData[key];
    }
  });

  // An empty array is still truthy, so check its length.
  if (records.length > 0) {
    return jsRecordsSuccess(records, "Example Success");
  }
  return jsRecordsFailure("Failed to push data to records");
}
```
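The script above hard-codes `avg`, `max`, and `min`, and it builds records only for timestamps that appear in `avg`. You might request other aggregates through the `aggregate_fields` option (for example `std` or `sum`). In that case, a more general approach is to loop over whatever aggregate objects are present and merge them by timestamp. The following is a sketch under that assumption. `pivotByTimestamp` is a hypothetical helper, not a platform function, and each aggregate it returns still needs a matching attribute in `getAttributes`.

```javascript
// Hypothetical helper: turn { aggregateName: { epochSeconds: value } } into one
// record per timestamp, e.g. { recordedAt: "1652745600", min: "1.44", ... }.
function pivotByTimestamp(seriesByAggregate) {
  var lookup = {};
  var records = [];

  Object.keys(seriesByAggregate).forEach(function (aggregate) {
    var series = seriesByAggregate[aggregate];
    Object.keys(series).forEach(function (timestamp) {
      // Create the record the first time a timestamp is seen, whichever
      // aggregate it comes from, so no timestamp is dropped.
      if (!lookup[timestamp]) {
        lookup[timestamp] = { recordedAt: timestamp };
        records.push(lookup[timestamp]);
      }
      lookup[timestamp][aggregate] = series[timestamp];
    });
  });

  // Keep the output in chronological order.
  records.sort(function (a, b) {
    return Number(a.recordedAt) - Number(b.recordedAt);
  });
  return records;
}

// Usage with a trimmed slice of the sample data:
var sample = {
  min: { "1652745600": "1.44", "1652832000": "1.45" },
  max: { "1652745600": "41.11", "1652832000": "41.9" },
  avg: { "1652745600": "2.134201388888891" }
};
var rows = pivotByTimestamp(sample);
console.log(JSON.stringify(rows[1]));
// {"recordedAt":"1652832000","min":"1.45","max":"41.9"}
```

Inside `getRecords`, this would replace the three `forEach` loops with something like `var records = pivotByTimestamp(rawJsonData.data['0']);`, followed by the same length check before calling `jsRecordsSuccess`.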

This is how the JSON is parsed in the preview: